Why use a Transit Gateway

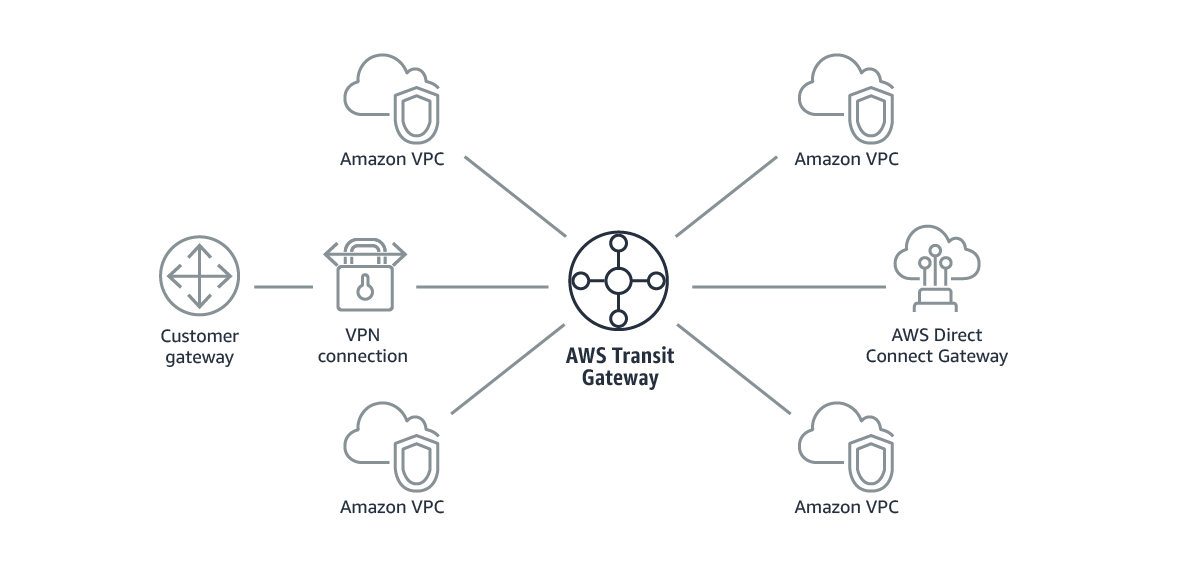

You may see in my designs and discussions that I always use an AWS Transit Gateway for connections outside of the VPC.

While there are use cases where this does not make sense (which I'll describe), I believe that for the majority of organisations a Transit Gateway is the preferable solution.

Cost 💰

First, let's address the cost implications of using an AWS Transit Gateway rather than VPC Peering. Many people use cost to justify peering because they see it directly on their bill.

Transit Gateways have two charging mechanisms: a VPC attachment charge and a data processing charge. A VPC attachment is charged per hour, at between 5¢ and 7¢ depending on region. With a month of roughly 730 hours, a Transit Gateway can therefore cost between $36.50 and $51.10 a month per VPC. Once you have hundreds of VPCs, this becomes expensive. VPC Peering, on the other hand, does not charge for the peering connection itself, and many people treat this as the sole reason not to use a gateway. Data processing is charged at a flat rate of 2¢/GB within a region. Data that leaves the region incurs a further flat rate of 2¢/GB for inter-region data transfer.

For example, take a Transit Gateway in the Sydney region (ap-southeast-2) attached to 3 VPCs, with a combined usage of 10GB over a month between all 3 VPCs across multiple AZs. It will cost $153.50: 3 attachments × 7¢ × 730 hours = $153.30, plus 10GB × 2¢ = $0.20 of data processing.

VPC Peering has only a single charging method, data transfer, although it can get complicated. Data that stays within the same AZ in a region incurs no transfer charges. Data that crosses availability zones is charged for inter-AZ data transfer at 1¢/GB in each direction.

So for the same 3 VPCs in the Sydney region (ap-southeast-2), connected by VPC Peering with 10GB over a month between all 3 VPCs, the monthly cost would be $0.20. This assumes all of that traffic crosses AZs and is charged in both directions.

As you can see, the cost impact of a Transit Gateway in "simple" networks is high even with low network utilisation. This is due to the attachment costs, which are static.
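The two worked examples can be reproduced with a little arithmetic. This is a minimal sketch using only the rates quoted above. It makes two assumptions: the 7¢ hourly attachment rate implied by the $153.50 Sydney figure, and that all 10GB of peering traffic crosses an AZ boundary. The function names are hypothetical, and this is not an AWS pricing API.

```python
HOURS_PER_MONTH = 730  # AWS pricing convention: 24 h x 365 days / 12 months


def tgw_monthly_cost(vpcs, attachment_per_hour, gb_processed, processing_per_gb=0.02):
    """Static per-VPC attachment charge plus per-GB data processing (rates from the text)."""
    attachments = vpcs * attachment_per_hour * HOURS_PER_MONTH
    processing = gb_processed * processing_per_gb
    return round(attachments + processing, 2)


def peering_monthly_cost(gb_cross_az, per_gb_each_way=0.01):
    """Peering itself is free; cross-AZ traffic is billed in both directions (assumed: all of it)."""
    return round(gb_cross_az * per_gb_each_way * 2, 2)


# Sydney example: 3 VPCs, 7 cents per attachment-hour, 10 GB in the month
print(tgw_monthly_cost(3, 0.07, 10))  # 153.5
print(peering_monthly_cost(10))       # 0.2
```

Almost all of the Transit Gateway figure is the static attachment component. You can see this by setting `gb_processed` to 0 and noticing the result barely moves.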

So if you are only focused on cost, and have a small network, then peering is probably a better option for you to manage networking in AWS.

It's important to note that on-premise connectivity may cost more when you don't use an AWS Transit Gateway (see below).

Network Throughput 🚿

The usual advice was that if you had large data volumes you should use VPC Peering connections, even if you used a Transit Gateway for other traffic, to get the increased capacity that VPC Peering could offer.

So let's look at a large, data-hungry application that moves several gigabytes a second between EC2 instances in different VPCs, such as logs being sent to central logging servers.

For peering connections there is no bandwidth restriction between two instances beyond the instances' own network capacity. However, data sent in a single flow is subject to the EC2 per-flow limit of 5Gbps, or 10Gbps within a cluster placement group (see the EC2 documentation). Take our example: 20 collector nodes on instances with 5Gbps network capacity (see the EC2 network performance information) talking to a cluster of 8 central nodes on instances with 12.5Gbps network capacity. There would be no throttling from a network perspective, although throughput may not reach the full 100Gbps.

For a Transit Gateway, the quotas show that the bandwidth limit applies per attachment (connection). So if traffic was just between 2 servers then, subject to instance and flow limits, we could achieve up to 50Gbps. Our many-to-one example is different. Capacity would not be throttled from the collector VPCs to the Transit Gateway, but the attachment to the VPC with the central nodes would be limited to 50Gbps.
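The capacity argument for the logging example can be sketched as simple bottleneck arithmetic. This is a rough model under stated assumptions. It uses the instance figures and the 50Gbps per-attachment figure from the text. It assumes each collector sends as a single flow, which the 5Gbps per-flow limit does not reduce further because it equals the instance capacity. It ignores protocol overhead, so it gives ceilings rather than achievable throughput.

```python
# Logging example from the text: 20 collectors -> 8 central nodes
collectors, collector_gbps = 20, 5.0
central_nodes, central_gbps = 8, 12.5
TGW_ATTACHMENT_CAP_GBPS = 50.0  # per-attachment bandwidth figure used in this article

send_capacity = collectors * collector_gbps        # aggregate sending side
receive_capacity = central_nodes * central_gbps    # aggregate receiving side

# Peering: the only ceiling is the weaker side's aggregate instance bandwidth
peering_ceiling = min(send_capacity, receive_capacity)

# Transit Gateway: all traffic converges on the central VPC's single attachment
tgw_ceiling = min(peering_ceiling, TGW_ATTACHMENT_CAP_GBPS)

print(peering_ceiling, tgw_ceiling)  # 100.0 50.0
```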

I have used logging in this example, where there may be alternatives for moving large data volumes or where latency might be tolerated. The principle still holds: if large data movements are needed, VPC Peering might be the only way to achieve the desired throughput.

Connectivity - VPN 🔐

In a simple AWS-only environment, cost and throughput might be all we need to worry about when deciding how to implement networking. However, few AWS landscapes are simple. In more traditional enterprises, the requirement for external connectivity begins to complicate networking.

First, let's look at VPNs. These are often used in the early stages of a move to the cloud because they are relatively simple, quick, and cheap to implement. In these scenarios the cloud estate is usually a small proof of concept (POC) or non-production environment. Traffic is tightly controlled, so it is easy to manage with a separate VPN per VPC. However, as organisations move to larger estates with more complicated workloads, the number of VPCs increases.

If we only use VPC Peering, and VPN connectivity is still needed outside of AWS, there are only two options we can implement. Either each VPC needs its own VPN, or a central account houses the VPNs along with a proxy to manage the transit traffic between VPCs.

If each VPC has its own VPN, there is a management overhead and an increase in cost, because VPN connections are charged at 5¢ an hour ($36.50 a month). In addition, the on-premise solution needs to be able to support the increased number of VPNs. For an estate of 3 VPCs with resilient connections, this would be an additional cost of $146 a month.
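One way to arrive at the $146 figure is sketched below. The interpretation is an assumption: "resilient" means two VPN connections per termination point, and the comparison is against a single resilient pair terminated centrally. Under that reading, per-VPC VPNs need 6 connections instead of 2, and the 4 extra connections at $36.50 each give $146. The function name is hypothetical.

```python
VPN_HOURLY = 0.05       # 5 cents per VPN connection-hour, as quoted above
HOURS_PER_MONTH = 730


def vpn_connections(vpcs, per_vpc, resilient=True):
    """Number of VPN connections needed (assumption: 'resilient' = two per termination point)."""
    per_endpoint = 2 if resilient else 1
    return per_endpoint * (vpcs if per_vpc else 1)


per_vpc = vpn_connections(3, per_vpc=True)    # a resilient pair in every VPC
central = vpn_connections(3, per_vpc=False)   # one resilient pair on a central gateway
extra_cost = (per_vpc - central) * VPN_HOURLY * HOURS_PER_MONTH

print(per_vpc, central, round(extra_cost, 2))  # 6 2 146.0
```

The gap grows linearly: every additional VPC adds another resilient pair in the per-VPC model but nothing in the central one.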

If we decided to have a central "transit VPC", we would need a proxy solution to manage the traffic and connectivity to more VPCs. Even a relatively cheap t-family instance will cost around $100 a month and add administrative overhead.

If, however, we use a Transit Gateway, we can terminate the VPN on the gateway. This makes the solution scalable: an increase in VPCs does not require extra VPNs and, apart from the Transit Gateway attachment costs, does not increase the cost of providing the connectivity.

It's also important to note that even if multiple VPNs are configured on a Virtual Private Gateway, the aggregate throughput is still limited to 1.25Gbps where the same Customer Gateway is used. With a Transit Gateway, up to 10Gbps can be achieved by using ECMP (Equal Cost Multi-Path) routing to combine multiple VPNs to a single Customer Gateway.
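To get a feel for how many VPN connections ECMP needs to reach a target throughput, here is a small sketch. It assumes the Site-to-Site VPN per-tunnel limit of 1.25Gbps, two tunnels per VPN connection, and that BGP and ECMP keep every tunnel active. Real throughput also depends on how flows hash across tunnels, so treat the result as a lower bound on the number of connections. The helper name is hypothetical.

```python
import math

TUNNEL_GBPS = 1.25    # per-tunnel throughput limit assumed for Site-to-Site VPN
TUNNELS_PER_VPN = 2   # each VPN connection provides two tunnels


def vpn_connections_for(target_gbps):
    """Minimum VPN connections so that ECMP across all tunnels can reach target_gbps."""
    tunnels = math.ceil(target_gbps / TUNNEL_GBPS)
    return math.ceil(tunnels / TUNNELS_PER_VPN)


print(vpn_connections_for(10))  # 4  (8 tunnels x 1.25 Gbps)
```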

Connectivity - Direct Connects 🔌

Now let's look at the second option for connectivity: Direct Connect.

As organisations' network requirements grow, the throughput and latency of a VPN are generally not sufficient for the demands of the workloads. We can combine multiple VPNs on a Transit Gateway, but that would not improve latency. By moving to Direct Connect, however, capacity can be increased up to 100Gbps. More importantly, because it is a dedicated and private connection, it removes the latency impact of an internet VPN, even on low-speed (sub-1Gbps) hosted connections.

If we use VPC Peering, there are two ways we can connect our Direct Connect to our VPCs. We can either link the Virtual Interface directly to the VPC, or terminate the connection on a Direct Connect Gateway and attach that to the VPC. In both scenarios, the hosted connection's interface, or a single interface on a dedicated connection, is linked to a VPC's Virtual Private Gateway. This is critical to account planning, because a hosted connection has only a single interface and a dedicated connection can have only 50 private interfaces. In addition, hosted connections do not share bandwidth between multiple connections, so you need to make sure each connection is sized for peak workload.

A Transit Gateway simplifies this, because a single Transit Virtual Interface is connected to the gateway via a Direct Connect Gateway. As a result, only a single link needs to be configured, and its capacity can be shared between VPCs. This reduces wasted capacity on a hosted connection. Another benefit is that network teams need no extra configuration as VPCs are created and deleted, because the connection between on-premise and the Transit Gateway remains in place.

Routing 📡

The previous sections show that networking components can quickly become complicated to understand and manage. In this section, let's look at how the various connectivity components affect routing.

We'll look at two common scenarios for AWS networking. In the first scenario, inbound and outbound internet access is housed in the same VPC as the application. External connectivity is only needed to talk to other applications, or to on-premise over a VPN. In the second scenario, inbound and outbound internet access is centralised, alongside inter-VPC and on-premise communications. Both scenarios have 10 application VPCs in a single region and on-premise connectivity over a VPN. For IP ranges, a Prefix List simplifies the management of on-premise IPs, and each VPC has a /22 CIDR from the 172.16.0.0/12 range.
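The addressing plan for these scenarios can be checked with Python's standard `ipaddress` module. This is a sketch under one assumption: the ten VPCs simply take the first ten /22 blocks of 172.16.0.0/12, which the text does not specify. It shows that every VPC CIDR sits inside the single 172.16.0.0/12 summary, which the Transit Gateway route tables below rely on.

```python
import ipaddress
from itertools import islice

SUPERNET = ipaddress.ip_network("172.16.0.0/12")

# Hypothetical allocation: the first ten /22 blocks, one per application VPC
vpc_cidrs = list(islice(SUPERNET.subnets(new_prefix=22), 10))

print(vpc_cidrs[0], vpc_cidrs[-1])                    # 172.16.0.0/22 172.16.36.0/22
print(all(c.subnet_of(SUPERNET) for c in vpc_cidrs))  # True
print(2 ** (22 - 12))                                 # 1024 /22 blocks available in total
```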

Scenario 1

For VPC peering we have what seems like a relatively simple route table. Besides the VPC CIDR as a local route, there are only a few extra entries. The default route (0.0.0.0/0) points to either the Internet Gateway or the NAT Gateway in the account. On-premise routing uses the Prefix List if the VPN is static, or BGP if dynamic routing is in place. The only other routes needed are one for each of the 9 peering connections, pointing each peer VPC's CIDR at the relevant connection. As a result, the route tables (1 private and 1 public) would have 12 entries each: local, default, Prefix List and 9 peering routes.

With a Transit Gateway, these route tables are simplified even further. The default routes and the VPC CIDR route stay the same. However, instead of 9 peering routes we need a single route pointing to the Transit Gateway. Because all VPCs are in the same range, one route for 172.16.0.0/12 directs traffic for any VPC to the Transit Gateway. The VPC's own /22 local route is more specific, so local traffic still stays in the VPC. The VPN terminates on the Transit Gateway, so we also need a route for the Prefix List pointing to the Transit Gateway. As a result, the route table has shrunk from 12 to 4 entries.
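Why the 4-entry table still works comes down to longest-prefix matching. When routes overlap, the most specific one wins. Below is a minimal model of that lookup for the VPC using 172.16.0.0/22. The `lookup` helper and target names are hypothetical, and 10.0.0.0/8 stands in for whatever the on-premise Prefix List actually contains.

```python
import ipaddress


def lookup(route_table, destination):
    """Longest-prefix match: the most specific route containing destination wins."""
    dest = ipaddress.ip_address(destination)
    matches = [(net, target) for net, target in route_table if dest in net]
    return max(matches, key=lambda m: m[0].prefixlen)[1]


net = ipaddress.ip_network
# Private route table for the VPC using 172.16.0.0/22 (Scenario 1, Transit Gateway)
routes = [
    (net("172.16.0.0/22"), "local"),
    (net("0.0.0.0/0"), "nat-gateway"),
    (net("172.16.0.0/12"), "transit-gateway"),  # every application VPC
    (net("10.0.0.0/8"), "transit-gateway"),     # stands in for the on-premise Prefix List
]

print(len(routes))                     # 4
print(lookup(routes, "172.16.1.10"))   # local  (/22 beats /12)
print(lookup(routes, "172.16.8.5"))    # transit-gateway
print(lookup(routes, "10.1.2.3"))      # transit-gateway
print(lookup(routes, "8.8.8.8"))       # nat-gateway
```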

Scenario 2

As we move to a centralised solution, the change to the VPC Peering option appears simple. All we need to worry about is the connection to the new centralised solution. We remove the public subnet and its route table, then update the default route in the private route table to point at the peering connection to the security VPC. If only it was that simple. If we just point the route at the peering connection, the traffic lands in the security VPC and is rejected, because VPC peering does not allow transitive routing. So what other options do we have? The only option here is a load balancer in the centralised solution VPC, reached through a VPC endpoint, because a route table cannot reference resources in another VPC. Since we are using a VPC endpoint, we don't need the peering to the centralised solution, so the VPC now has a single route table with 11 routes defined.

A Transit Gateway runs into a similar issue, but let's see how we address it. As with peering, we remove the public subnet and public route table. That leaves the private route table, which currently has 4 entries: local CIDR, default route, Prefix List and 172.16.0.0/12. The default route, on-premise traffic and all VPC traffic now go via the Transit Gateway. From an application VPC's perspective, the table can therefore be reduced to a single default route pointing to the Transit Gateway, plus the local route, for 2 entries in total. So how does traffic that previously went via a NAT/Internet Gateway reach the central solution? Two things are needed. First, in the Transit Gateway route table, we add a default route pointing to the central solution's VPC attachment. Then, in that VPC, we amend the route table of the subnets where the Transit Gateway is attached. We point it at the solution's interfaces (instance or load balancer), which then act as the gateway.
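The sketch below traces the two lookups a packet goes through to show how the 2-entry application route table still reaches everything. The first lookup is in the application VPC's route table and the second in the Transit Gateway route table. The attachment names and the 10.0.0.0/8 on-premise range are hypothetical, and the VPC CIDRs reuse the first ten /22 blocks of 172.16.0.0/12. The sketch models only the Transit Gateway route table associated with the application VPCs, not the return path from the security VPC.

```python
import ipaddress


def lookup(route_table, destination):
    """Longest-prefix match over a {cidr: target} mapping."""
    dest = ipaddress.ip_address(destination)
    matches = [(ipaddress.ip_network(cidr), target)
               for cidr, target in route_table.items()
               if dest in ipaddress.ip_network(cidr)]
    return max(matches, key=lambda m: m[0].prefixlen)[1]


# Application VPC (172.16.0.0/22): just two entries
app_vpc_routes = {"172.16.0.0/22": "local", "0.0.0.0/0": "tgw"}

# Transit Gateway route table associated with the application VPCs (hypothetical names)
tgw_routes = {
    "0.0.0.0/0": "attach-security-vpc",  # central internet egress / inspection
    "10.0.0.0/8": "attach-vpn",          # stands in for the on-premise range
    **{f"172.16.{4 * i}.0/22": f"attach-app-vpc-{i:02d}" for i in range(10)},
}


def path(destination):
    """First hop from the application VPC, then the Transit Gateway decision if needed."""
    hop = lookup(app_vpc_routes, destination)
    return [hop] if hop == "local" else [hop, lookup(tgw_routes, destination)]


print(path("8.8.8.8"))      # ['tgw', 'attach-security-vpc']
print(path("172.16.8.20"))  # ['tgw', 'attach-app-vpc-02']
print(path("10.1.2.3"))     # ['tgw', 'attach-vpn']
print(path("172.16.1.1"))   # ['local']
```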

Security 🔒

So let's look at the security implications of the two networking solutions, since network controls are often used to secure workloads.

Network security controls such as Network Access Control Lists (NACLs) and Security Groups can equally be applied to VPCs using either Transit Gateways or Peering. So are there any security benefits or disadvantages either way?

Well, the obvious one is that peering connections let you reference security groups across VPCs. Great! Every time a new workload comes up in a connected VPC, I can update my security groups to allow it in by referencing its security group. That is true in principle, but how are you going to apply it? Consider the move to architectures such as microservices, event-driven architecture, and API-first communication, along with methodologies such as GitOps, DevSecOps, and Zero Touch Deployments. With these, this is very hard to achieve. Unless the code is tightly coupled, one application may not even know that another exists, let alone that it is hosted in a connected VPC.

So what else is there?

Well, for me a Transit Gateway has several security benefits, especially with centralised connectivity solutions such as proxies, WAF, etc.

First, I can centrally manage all networking. This allows me to block developers or whole accounts from creating components such as Internet Gateways or VPN connections that could compromise an account. I can also control what they are allowed to reach by requiring approval for updates to central ingress/egress systems or route tables.

Second, I can enforce the segregation of environments such as Production and Non-Production. By blocking all VPC peering, I ensure that VPCs can only talk to other VPCs that are connected to the same Transit Gateway and use the same Transit Gateway route table. The only thing I have to do is share the Transit Gateway if it is in a different account and grant the ability to attach the VPC to it.

Finally, I can respond to threats or compromises more quickly. By managing routing in a TGW and centralising security, I can block communication with suspicious IPs, isolate a VPC, and inspect traffic logs at the gateway level. I can also enforce scanning for things such as viruses and PCI compliance.

Quotas 📃

So let's look at where quotas might come into play and make a Transit Gateway the only possible way to connect.

First, let's look at a scenario with just inter-VPC communications.

The number of peering connections allowed per VPC can be raised from the default of 50 to a maximum of 125 (see quota). Each VPC can therefore peer with at most 125 others, so a full peering mesh is impossible in networks of more than 126 VPCs. Beyond that, an alternative method would be needed to allow all VPCs to communicate. In addition, while peering connections can be cross-region, the quota applies at the VPC level, so cross-region peerings count towards the same limit.

On the other hand, a Transit Gateway can have up to 5,000 attachments (see quota), which means that 5,000 VPCs can connect to a single gateway. In addition, a Transit Gateway can be peered with up to 50 other Transit Gateways. This allows Transit Gateways to peer with other regions at the gateway level. If attachment limits were hit, VPCs could also connect via peered gateways, which would raise the theoretical limit to around 250 thousand connected VPCs.
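The quota arithmetic above can be written out as a short sketch. A VPC at the 125-peering maximum can peer with 125 others, so the largest full mesh is 126 VPCs, and that mesh already needs 7,875 peering connections. The 250,000 figure is a rough upper bound from 5,000 × 50. In practice, peering attachments consume part of each gateway's attachment quota, so the real number is somewhat lower. The constant and function names are hypothetical.

```python
PEERINGS_PER_VPC_MAX = 125   # adjustable maximum quoted above
TGW_ATTACHMENTS_MAX = 5_000
TGW_PEERS_MAX = 50


def max_full_mesh_vpcs(peerings_per_vpc):
    """In a full mesh each VPC peers with every other one, so n - 1 <= limit."""
    return peerings_per_vpc + 1


def mesh_connections(n):
    """Peering connections in a full mesh of n VPCs: n choose 2."""
    return n * (n - 1) // 2


print(max_full_mesh_vpcs(PEERINGS_PER_VPC_MAX))  # 126
print(mesh_connections(126))                     # 7875
print(TGW_ATTACHMENTS_MAX * TGW_PEERS_MAX)       # 250000 (rough upper bound)
```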

Now let's look at a scenario with on-premise communications.

Take a single hosted Direct Connect connected via a Direct Connect Gateway. We could choose to attach it to a Transit Gateway, or directly to VPCs via Virtual Private Gateways. In either case, the Direct Connect quotas need to be considered.

For Virtual Private Gateways, there is a limit of 10 per Direct Connect Gateway. So a maximum of 10 VPCs could be attached, and beyond that a routing solution similar to the VPN scenario earlier would be needed. As above, even a t-family instance will cost around $100 a month and add administrative overhead.

For Transit Gateways, the limit is 3 per Direct Connect Gateway. Although this is significantly lower, a Transit Gateway can have 5 thousand attachments. So even a single Transit Gateway can connect 500 times more VPCs than Virtual Private Gateways can.
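Here is the "500 times" comparison as arithmetic, using the quota figures quoted above. The three-gateway total is a rough upper bound that ignores the attachment each Transit Gateway spends on the Direct Connect Gateway itself. The constant names are hypothetical.

```python
DXGW_VGW_LIMIT = 10          # Virtual Private Gateways per Direct Connect Gateway (figure above)
DXGW_TGW_LIMIT = 3           # Transit Gateways per Direct Connect Gateway (figure above)
TGW_ATTACHMENTS_MAX = 5_000

vgw_reach = DXGW_VGW_LIMIT * 1           # one VPC behind each Virtual Private Gateway
tgw_reach_single = TGW_ATTACHMENTS_MAX   # a single Transit Gateway

print(tgw_reach_single // vgw_reach)          # 500
print(DXGW_TGW_LIMIT * TGW_ATTACHMENTS_MAX)   # 15000 with all three Transit Gateways (upper bound)
```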

Conclusion 🏆

So we've looked at a lot of items, and hopefully you can see the use cases for both VPC Peering and Transit Gateways. But in most cases you can only choose one option.

So which is it to be?

Let's use the trusted AWS Well-Architected Framework pillars to help us decide. We'll look at each pillar, see which solution comes out on top, and then crown an overall winner.

Operational Excellence 🛠️

For me, Transit Gateways win on this pillar. Implementation is significantly simpler as the number of workloads increases. Traffic can also be monitored at the gateway, so monitoring and troubleshooting of connectivity can be implemented separately from, or in addition to, VPC-level solutions.

Security 🔒

Being able to cross-reference security groups between VPCs may seem beneficial, but I see this as less practical than it was 10 years ago. With many organisations "All-In on AWS", there can be too many teams, and deployment methods, to benefit from this feature. In addition, I believe that the extra security Transit Gateways can offer outweighs the loss of the security group functionality.

Reliability ⛈️

As both options are AWS services, this pillar is quite a tight battle. For me they are equal on 4 out of 5 design principles. The tie-breaker principle is "Stop guessing capacity". VPC Peering has no network capacity restrictions of its own, but Transit Gateways make better use of shared connections such as VPN and Direct Connect. Digging deeper into some of the best practices, Transit Gateway starts to sway the balance. On quotas, Transit Gateway is a big winner on the number of VPCs that can be connected. Taking these into account, I think Transit Gateway just wins on this pillar.

Performance Efficiency 🚀

Performance efficiency is an interesting pillar for connectivity. At first, VPC Peering seems to win because it has the greater throughput. But is throughput all that makes something performant? Transit Gateway significantly simplifies performance monitoring. For everything apart from throughput, Transit Gateway again makes things easier to manage and implement. So for me Transit Gateway wins, with the caveat of using a VPC Peering connection where throughput capacity is required.

Cost Optimization 💰

Cost will always be the hard one to argue. On the surface, a Transit Gateway costs on average $40 per VPC per month and VPC Peering costs nothing. But what costs do we save by centralising connectivity solutions such as internet access and VPN access? Centralising internet access could save ~$100 per VPC by removing NAT Gateways ($120 average cost for NAT Gateways across 3 AZs, minus the cost of the central solution). That is significant if you have hundreds of VPCs with only minor internet access. VPN de-duplication would save ~$40 per VPC that uses the same VPN. Finally, a shared Direct Connect could reduce over-provisioning of links, especially on hosted connections. For example, replacing 20 50Mbps links with a single 1Gbps link would give savings of 50%, and a reduced capacity of 500Mbps would give 66% savings.
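To make the trade-off concrete, here is a rough per-VPC offset calculation using only the averages quoted above: a $40 attachment, a ~$100 NAT saving net of the central solution, and a ~$40 VPN saving. These are the article's rough figures, not AWS list prices, and the function name is hypothetical. The sketch says nothing about hosted connection pricing. It only checks that 20 × 50Mbps adds up to the 1Gbps of a single shared link.

```python
# Monthly per-VPC averages quoted in this section (rough figures, not AWS list prices)
TGW_ATTACHMENT = 40.0   # Transit Gateway attachment
NAT_SAVING = 100.0      # NAT Gateways removed, net of the central egress solution
VPN_SAVING = 40.0       # per-VPC VPN no longer needed


def net_monthly_saving(vpcs, uses_internet=True, uses_vpn=True):
    """Positive result = centralising via a Transit Gateway is cheaper overall."""
    per_vpc = -TGW_ATTACHMENT
    if uses_internet:
        per_vpc += NAT_SAVING
    if uses_vpn:
        per_vpc += VPN_SAVING
    return per_vpc * vpcs


print(net_monthly_saving(100))                       # 10000.0
print(net_monthly_saving(100, uses_internet=False))  # 0.0
print(20 * 50)                                       # 1000 Mbps of dedicated hosted links
```

With these figures, VPN de-duplication alone roughly pays for the attachment, and removing per-VPC NAT Gateways is what turns the Transit Gateway into a net saving.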

Sustainability 🌿

The biggest thing for sustainability is not to have something running at all, or to reduce the effort needed to run it. Then, once you've decided what to run, make sure you maximise its utilisation. For networking, the effort principle shows up in two ways: the number of connections that are established, and the effort required to route the traffic. Consider 50 VPCs in a single region communicating with no external access (internet or on-premise). VPC peering would need 1,225 connections (each VPC connected to 49 others) and route tables with a total of 2,450 entries. With a Transit Gateway, this drops to 100 connections (the VPC and gateway ends of each attachment) and 100 route table entries. This reduction greatly reduces the energy needed to perform routing. So again, for this pillar I think Transit Gateway is a clear winner.
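The connection and route counts for the 50-VPC example can be reproduced as follows. The Transit Gateway route count is one reading of the "100 entries" figure and is an assumption: each VPC has one route to the gateway, and the gateway route table has one route per VPC. The function names are hypothetical.

```python
def peering_mesh(n):
    """Full mesh: n choose 2 connections; each VPC routes to its n - 1 peers."""
    connections = n * (n - 1) // 2
    route_entries = n * (n - 1)
    return connections, route_entries


def transit_gateway(n):
    """Hub and spoke: two ends per attachment; one route per VPC on each side (assumed)."""
    connection_ends = 2 * n
    route_entries = n + n
    return connection_ends, route_entries


print(peering_mesh(50))     # (1225, 2450)
print(transit_gateway(50))  # (100, 100)
```

The peering figures grow quadratically with the number of VPCs, while the Transit Gateway figures grow linearly.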

And the winner is...

Transit Gateway 🥳

I'm always glad when thoughts I've built up over the last decade are justified. Hopefully you're all in agreement, at least based on the information I've provided, that Transit Gateways are the best networking solution in most cases.

If you disagree with any of the points I've made, please let me know. Even after 10 years, I'm always learning how new services and features change the possibilities.

For more information on Transit Gateways, take a look at the AWS documentation.
